Reverse engineering Codex CLI to get GPT-5-Codex-Mini to draw me a pelican

9th November 2025

OpenAI partially released a new model yesterday called GPT-5-Codex-Mini, which they describe as "a more compact and cost-efficient version of GPT-5-Codex". It’s currently only available via their Codex CLI tool and VS Code extension, with proper API access "coming soon". I decided to use Codex to reverse engineer the Codex CLI tool and give me the ability to prompt the new model directly.

I made a video talking through my progress and demonstrating the final results.

This is a little bit cheeky

OpenAI clearly don’t intend for people to access this model directly just yet. It’s available exclusively through Codex CLI which is a privileged application—it gets to access a special backend API endpoint that’s not publicly documented, and it uses a special authentication mechanism that bills usage directly to the user’s existing ChatGPT account.

I figured reverse-engineering that API directly would be somewhat impolite. But... Codex CLI is an open source project released under an Apache 2.0 license. How about upgrading that to let me run my own prompts through its existing API mechanisms instead?

This felt like a somewhat absurd loophole, and I couldn’t resist trying it out and seeing what happened.

Codex CLI is written in Rust

The openai/codex repository contains the source code for the Codex CLI tool, which OpenAI rewrote in Rust just a few months ago.

I don’t know much Rust at all.

I made my own clone on GitHub and checked it out locally:

git clone git@github.com:simonw/codex
cd codex

Then I fired up Codex itself (in dangerous mode, because I like living dangerously):

codex --dangerously-bypass-approvals-and-sandbox

And ran this prompt:

Figure out how to build the rust version of this tool and then build it

This worked. It churned away for a bit and figured out how to build itself. This is a useful starting point for a project like this—in figuring out the compile step the coding agent gets seeded with a little bit of relevant information about the project, and if it can compile that means it can later partially test the code it is writing while it works.

Once the compile had succeeded I fed it the design for the new feature I wanted:

Add a new sub-command to the Rust tool called “codex prompt”

codex prompt “prompt goes here”: this runs the given prompt directly against the OpenAI API that Codex uses, with the same code path and authentication credentials as the rest of Codex.

codex prompt -m “prompt goes here”: same again but lets you set the model

codex prompt “prompt goes here” -s/--system “system prompt goes here”: runs with a custom system/developer message

codex prompt --models: this lists all available models that can be used with the “codex prompt” command

My design for this new sub-command is lifted directly from my own llm command.
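As a rough illustration of the interface that prompt asks for, here is a minimal Python sketch using argparse. It is not the Rust code Codex wrote, only the command-line shape described above. The function name `build_parser` and the long `--model` spelling are assumptions of mine; the prompt itself only mentions `-m`, `-s/--system` and `--models`.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical sketch of the `codex prompt` interface described above."""
    parser = argparse.ArgumentParser(prog="codex prompt")
    # The prompt is optional so that `--models` can be used on its own.
    parser.add_argument("prompt", nargs="?", help="prompt text to send")
    # `--model` as a long form is an assumption; the design only names `-m`.
    parser.add_argument("-m", "--model", help="model to use instead of the default")
    parser.add_argument("-s", "--system", help="custom system/developer message")
    parser.add_argument("--models", action="store_true", help="list available models")
    return parser
```

Calling `build_parser().parse_args(["Generate an SVG", "-m", "gpt-5-codex-mini"])` yields a namespace with `prompt` and `model` set and `system` left as `None`.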

Iterating on the code

Codex got to work. I’ve shared the full transcript here (using the tool I described here), but this TODO list it made itself is a useful summary of what it decided to do:

Updated Plan

└ ✔ Inspect CLI structure and relevant core utilities for sending prompts and listing models

✔ Implement new `codex prompt` subcommand (CLI plumbing + command handler)

✔ Format, lint, and test (`just fmt`, `just fix -p codex-cli`, `cargo test -p codex-cli`)

I like that it figured out the justfile in the repo and decided to use it to run formatting and linting commands without me needing to tell it to.

I tried running the first version of the code it wrote like this:

./target/debug/codex prompt 'Generate an SVG of a pelican riding a bicycle' -m gpt-5-codex-mini

... and it didn’t quite work. I got this:

(reasoning summary) **Seeking

(reasoning summary) instructions

(reasoning summary) and

(reasoning summary) sandbox

(reasoning summary) info

(reasoning summary) **

(reasoning summary) **Dec

(reasoning summary) iding

(reasoning summary) on

(reasoning summary) SVG

(reasoning summary) creation

(reasoning summary) approach

(reasoning summary) **

(reasoning summary) **Checking

(reasoning summary) current

(reasoning summary) directory

(reasoning summary) **

(reasoning summary) **Preparing

(reasoning summary) to

(reasoning summary) check

(reasoning summary) current

(reasoning summary) directory

(reasoning summary) **

I’m ready to help—what would you like me to do next?I’m ready to help—what would you like me to do next?

Token usage: total=2459 input=2374 cached_input=0 output=85 reasoning_output=64

Note that it DID think about SVG creation, but then decided it should look at the current directory. This isn’t what I want—it appeared to be running in Codex’s normal mode with a system prompt telling it to edit files on disk. I wanted it to respond to the prompt without acting as if it had a full workspace available to it.

I prompted it again, pasting in the broken output:

this did not seem to work—here is what happened—note that the reasoning summary comes out on a lot of lines when I would rather it streams out to stderr on the same line (flushing constantly)—and then despite the reasoning summary thinking about pelican on SVG it did not actually act on that and display the result, which is very surprising. [pasted content]
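The streaming behaviour requested there (fragments written to stderr on one line, flushed as they arrive) can be sketched in Python as follows. This is an illustration of the idea rather than what Codex implemented in Rust; the function name `stream_reasoning` and the assumption that each fragment carries its own leading space are hypothetical.

```python
import sys
from typing import Iterable, TextIO


def stream_reasoning(chunks: Iterable[str], out: TextIO = sys.stderr) -> None:
    """Write reasoning-summary fragments on a single line, flushing after each."""
    for chunk in chunks:
        out.write(chunk)  # no newline, so fragments join up on the same line
        out.flush()       # flush every fragment so it shows up immediately
    out.write("\n")       # end the line once the stream is finished
    out.flush()
```

Writing to stderr keeps the reasoning summary separate from the actual response on stdout, so the response can still be piped into a file or another tool.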

It churned for a bit more, but from the output it looked like it was still trying to include Codex-specific tools and workspace information. So I said:

Note that this new 'codex prompt' command should NOT be able to execute any tools at all, and should not have to think about the workspace or sandbox. It should send a single prompt with no tools and then stream and display a response and then stop.

This got me a new build, but when I ran it:

Error: unexpected status 400 Bad Request: {"detail":"Instructions are not valid"}

My guess is that OpenAI’s custom backend API for Codex doesn’t like it if you attempt to call it without passing in the expected instructions. Fair enough!

Rather than paste that error in I got Codex to try the command itself, so it could see the error:

You try running this and see what happens: ./target/debug/codex prompt 'Generate an SVG of a pelican riding a bicycle'

Codex switched back to the default instructions. From its reply:

cli/src/prompt_cmd.rs#L1-L320 now forces a tool-free request: we keep the workspace defaults, inject a lightweight system/developer message (“don’t run tools”), disable apply_patch/web search via config overrides, and build the outbound prompt as just that system span plus the user text.

I guess that will have to do. I asked for one more feature:

Add a --debug option to the codex prompt command which causes the full JSON request and response to be printed to stderr, plus the URL that is being accessed and the HTTP verb
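A minimal Python sketch of what such a debug option might do is below. The `[codex prompt debug]` prefix and the "Request JSON:" label are taken from the output shown later in this post; the function name `debug_request` and its signature are hypothetical, and the real implementation is the Rust code on my branch.

```python
import json
import sys
from typing import Any, TextIO

PREFIX = "[codex prompt debug]"


def debug_request(method: str, url: str, payload: dict[str, Any],
                  out: TextIO = sys.stderr) -> None:
    """Print the HTTP verb, URL and pretty-printed request JSON to `out`."""
    out.write(f"{PREFIX} {method} {url}\n")
    out.write(f"{PREFIX} Request JSON:\n")
    out.write(json.dumps(payload, indent=2) + "\n")
    out.flush()
```

Sending the debug output to stderr means it does not get mixed into the model's response on stdout.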

... and we’re ready to try this thing out!

Notably, I haven’t written a single line of Rust myself here, and I paid almost no attention to what the code was actually doing. My main contribution was to run the binary every now and then to see if it was doing what I needed yet.

I’ve pushed the working code to a prompt-subcommand branch in my repo if you want to take a look and see how it all works.

Let’s draw some pelicans

With the final version of the code built, I drew some pelicans. Here’s the full terminal transcript, but here are some highlights.

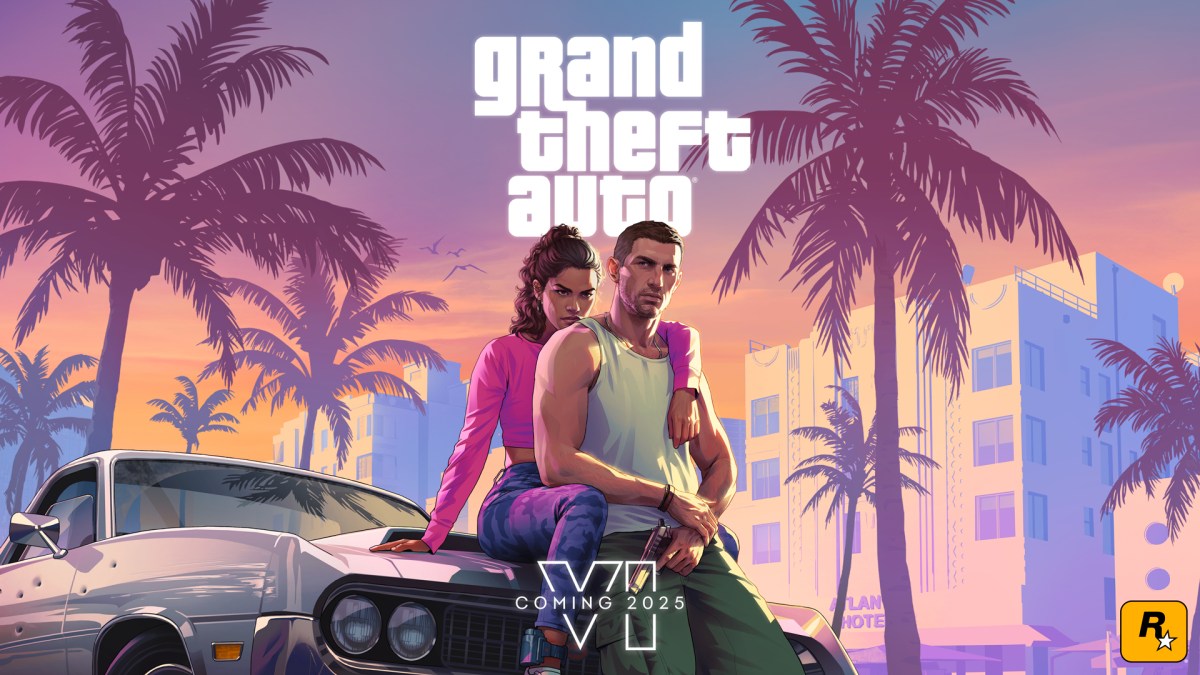

This is with the default GPT-5-Codex model:

./target/debug/codex prompt "Generate an SVG of a pelican riding a bicycle"

I pasted it into my tools.simonwillison.net/svg-render tool and got the following:

I ran it again for GPT-5:

./target/debug/codex prompt "Generate an SVG of a pelican riding a bicycle" -m gpt-5

And now the moment of truth... GPT-5 Codex Mini!

./target/debug/codex prompt "Generate an SVG of a pelican riding a bicycle" -m gpt-5-codex-mini

I don’t think I’ll be adding that one to my SVG drawing toolkit any time soon.

Bonus: the --debug option

I had Codex add a --debug option to help me see exactly what was going on.

./target/debug/codex prompt -m gpt-5-codex-mini "Generate an SVG of a pelican riding a bicycle" --debug

The output starts like this:

[codex prompt debug] POST https://chatgpt.com/backend-api/codex/responses

[codex prompt debug] Request JSON:

{
  "model": "gpt-5-codex-mini",
  "instructions": "You are Codex, based on GPT-5. You are running as a coding agent ...",
  "input": [
    {
      "type": "message",
      "role": "developer",
      "content": [
        {
          "type": "input_text",
          "text": "You are a helpful assistant. Respond directly to the user request without running tools or shell commands."
        }
      ]
    },
    {
      "type": "message",
      "role": "user",
      "content": [
        {
          "type": "input_text",
          "text": "Generate an SVG of a pelican riding a bicycle"
        }
      ]
    }
  ],
  "tools": [],
  "tool_choice": "auto",
  "parallel_tool_calls": false,
  "reasoning": {
    "summary": "auto"
  },
  "store": false,
  "stream": true,
  "include": [
    "reasoning.encrypted_content"
  ],
  "prompt_cache_key": "019a66bf-3e2c-7412-b05e-db9b90bbad6e"
}

This reveals that OpenAI’s private API endpoint for Codex CLI is https://chatgpt.com/backend-api/codex/responses.

Also interesting is how the "instructions" key (truncated above, full copy here) contains the default instructions, without which the API appears not to work—but it also shows that you can send a message with role="developer" in advance of your user prompt.
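To make the shape of that request easier to see, here is a small Python sketch that builds the same structure as the debug output above. It only constructs the dictionary and does not send anything. The function name `build_request` is hypothetical, and `uuid.uuid4()` is a stand-in for the `prompt_cache_key`, since the post does not show how Codex generates that value.

```python
import json
import uuid
from typing import Any


def build_request(model: str, instructions: str,
                  developer_text: str, user_text: str) -> dict[str, Any]:
    """Build a request body mirroring the debug output shown above."""

    def message(role: str, text: str) -> dict[str, Any]:
        # Each input item wraps its text in a list of `input_text` parts.
        return {
            "type": "message",
            "role": role,
            "content": [{"type": "input_text", "text": text}],
        }

    return {
        "model": model,
        "instructions": instructions,  # the default Codex instructions
        "input": [message("developer", developer_text), message("user", user_text)],
        "tools": [],
        "tool_choice": "auto",
        "parallel_tool_calls": False,
        "reasoning": {"summary": "auto"},
        "store": False,
        "stream": True,
        "include": ["reasoning.encrypted_content"],
        # Assumption: any unique string; the real key format is not documented here.
        "prompt_cache_key": str(uuid.uuid4()),
    }
```

The developer message comes first in `input`, followed by the user prompt, which matches the ordering in the debug output.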
